A Neutrosophic Entropy-Based Statistical Model for Uncertainty Quantification in Mixed-Type Linguistic Data: Application to University Korean Language Teaching Management Innovation Evaluation

Keywords:

Neutrosophic; Entropy; Uncertainty Quantification; Mixed-Type Datasets; Neutrosophic Statistics; Truth-Indeterminacy-Falsity (T, I, F); Statistical Modeling; Information Measures; Incomplete and Ambiguous Data; Neutrosophic Logic

Abstract

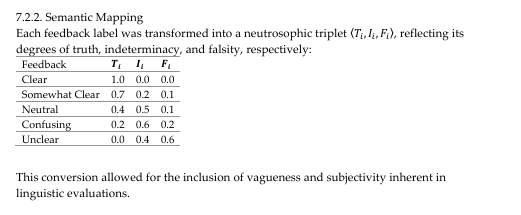

Quantifying uncertainty in datasets that combine numerical and linguistic information poses a unique challenge to classical probabilistic frameworks, especially when ambiguity, vagueness, and partial truth are present. This paper introduces a novel statistical model grounded in neutrosophic entropy, built upon the triplet logic of truth (T), indeterminacy (I), and falsity (F). The model is applied to a hybrid dataset of university Korean language learners, integrating pronunciation scores and qualitative feedback to evaluate cognitive uncertainty. A new entropy formulation is derived and analyzed, with numerical experiments revealing that the neutrosophic entropy measure more accurately captures epistemic ambiguity than classical or fuzzy entropy. Results show distinct uncertainty profiles across learners, making the framework valuable for educational diagnostics and linguistic data modeling.

License

Copyright (c) 2025 Neutrosophic Sets and Systems

This work is licensed under a Creative Commons Attribution 4.0 International License.